Studio Recording

Compressor distortion in the Logic Pro X Compressor plugin

My research comparing the distortion characteristics of the seven compressor types in the Logic Pro X Compressor.

Focusrite Red 3 and Logic Pro X "Studio VCA" plugin distortion compared

a_short_comparison_of_the_focusrite_red_3_and_logic_studio_vca.pdf (4775 kb)

10 common Mix mistakes (SOS magazine)

http://www.soundonsound.com/sos/sep11/articles/mix-mistakes.htm

http://www.soundonsound.com/sos/sep11/articles/mixmistakesreadinglist.htm

The Art of Mixing

Two Swiss Army Knives (audio editors)

A long time ago (when the word 'digital' was still magical) the digital audio editor programme was invented. The most popular was Cool Edit Pro (which grew into Adobe Audition). The reason we don't hear much about these now is that fully developed DAWs have made the separate editor largely redundant. However, I still find it handy to use one for odd jobs now and then, and there are two that are amazing for what they offer for the price (which, by the way, is free).

Audacity - this popular software offers pretty much all the functions of a DAW, and more, such as editing file metadata and generating DTMF tones. We could be waiting a long time for Pro Tools to offer those features. The list of internal effects is large, and it can also load AU plugins.

Wavepad - also offers a good range of FX, file formats etc. There are more editing options than in Audacity but fewer processing options (as it uses only its internal plugins). There is an RTA (real-time analyser) window, and click reduction. It also has an online sound library.

Don't expect Waves-type sound quality from either (although you might find something interesting in the DSP artefacts), but because these programmes are tiny they can edit or process nice and fast.

Mixing by Eye

Almost all mixdowns are performed on a DAW these days. The availability of loads of FX and signal processors, automation, and powerful editing are the reasons that all but the analogue purist will use Pro Tools or an equivalent programme. However, it is not all good news. It is easy to get bogged down in the sheer amount of choice and ultra-fine editing that can be achieved. I think the saying "can't see the wood for the trees" was intended for 'in the box' mixing.

Another consideration is that the computer is primarily a visual tool. Much of what we do relies heavily or exclusively on visual feedback (eg driving a car, drawing, reading), so it should come as no surprise that our brains are biased to focus mostly on what we see. Blind people have acute hearing (or, more accurately, a better ability to 'listen') not only because they have to, but also because, without visual distractions, they can.

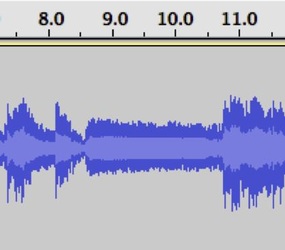

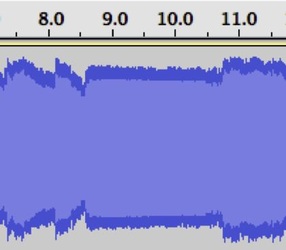

When it comes to working with sound, this can be a real drawback. Firstly, our brains can only cope with so much information at a time, so without extra concentration, the on-screen information will mask some of the aural information. Secondly, if (like me) you are a strong visual learner, you will find it hard to ignore the graphic aspects of the DAW. For example, untidy automation data can be 'improved' by tidying it into straight lines, whereas a messy curve may sound better.

Here is another example: DAWs show the audio waveform on a linear, normalised scale of magnitude, whereas we hear it logarithmically. Look at the pictures above. These are a portion of the same audio track. On the left is the usual way of representing the waveform, and on the right the vertical (magnitude) axis has been changed to dB. DAWs (such as Pro Tools and Logic Pro) use a normalised scale of ±1 or ±100 (ie %), so that the amplitude shows the relative voltage that will appear on the analogue output. In this example the soft passage of music in the middle of the picture is at 0.5 and the loud section is at 1. This makes the difference seem large, and as a graphic type I am tempted to even this up by compressing the track. Now look at the same piece when the magnitude scale is changed to dB. The big variation disappears, as the mid-section level drops by only about 6dB (20·log10(0.5) ≈ -6dB), which is quite a modest amount (and in fact the dynamic range of this track is perfect). Here the eye and the ear are in agreement. Not all audio software lets the scale be changed, and in any case we would have to train our ears to overrule our eyes, because the graphic on the left certainly looks more interesting. When purchasing, we say 'caveat emptor'; when mixing, we need to be thinking 'let the perceiver beware'.
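To see why the two displays disagree, here is the conversion the dB view is doing. This is a minimal Python sketch, assuming levels normalised to ±1 (full scale = 0dB); the function names are my own, not taken from any DAW. Because the normalised scale represents voltage (amplitude) rather than power, the conversion is 20·log10(level), not 10·log10(level).

```python
import math


def level_to_db(level: float) -> float:
    """Convert a normalised level (full scale = 1.0) to dB relative to full scale.

    Uses 20*log10 because the DAW scale represents amplitude (voltage),
    not power. The sign of the sample is ignored; silence returns -inf.
    """
    magnitude = abs(level)
    if magnitude == 0.0:
        return float("-inf")
    return 20.0 * math.log10(magnitude)


def db_to_level(db: float) -> float:
    """Inverse of level_to_db: dB relative to full scale -> normalised level."""
    return 10.0 ** (db / 20.0)


if __name__ == "__main__":
    for level in (1.0, 0.71, 0.5, 0.25, 0.1):
        print(f"{level:5.2f} -> {level_to_db(level):6.1f} dB")
```

Run as a script, it shows that halving the level (0.5) comes out at about -6dB, while -3dB corresponds to a level of about 0.71.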

Changes in Recording

Recording Engineers and Producers talk at Church Studios, London. Hosted by George Shilling of RecordProduction.com, this hour-long video covers topics such as big studios vs home studios, changes to engineer employment and types of work, and some other stuff.

Isolated tracks

There are quite a few copies of classic tracks' multitrack masters floating about on the Internet these days. Almost certainly they are illegal, having been released out the back door by some "enterprising" studio person. I'm not advocating that they should be freely available, but since they are, it can be educational to study how the songs were put together. Many are straightforward in terms of track allocation, although a common twist is that a single track gets used for more than one part (eg vocal and a guitar) due to the limited track count, especially on 4, 8, and even 16 track tapes. Some are more interesting: for example, Bohemian Rhapsody (which had lots of 'stems' but was eventually reduced to 24 tracks) has 3 tracks allocated to bass. There is a single bass part, but it is DI'd, close miked, and distant miked. This was the custom of Roy Thomas Baker, the producer.

Another great example to study is Stevie Wonder's 'Superstition'. It was recorded on 16 tracks, and a whopping 8 of those are Clavinet. Drums get only 3 tracks: kick and stereo overheads. Exactly what the Clav part is has vexed keyboard players since the song came out, but a careful listen to the multitrack reveals the secret. There are only two played parts: the main riff and an accompanying part. Each of these has a delayed version. There is also a double of the accompanying part (giving 3 tracks for this part), and four doubles of the main riff (5 tracks). According to Malcolm Cecil, who engineered and co-produced the song, these were all used in the mix as 4 'stereo' pairs. The D6 Clavinet is mono, so either the parts were split onto multiple tracks and recorded with different EQ, or the instrument was miked up in stereo. In any case Stevie played two parts, not eight. Even so, the parts are busy, and this is why even he cannot reproduce the exact album sound when playing it live.

Dynamic Processors

dynamics_control_101.pdf (50 kb)

When should I Gate?

Gates can be used in all sorts of ways (eg to cut the noise on a walkie-talkie when there is no transmission), but they really come into their own in multitrack recording. The prime candidates for gates are toms and snare drum, due to the leakage from other parts of the kit (eg hi-hat). Electric guitar usually needs gating too, because of the hiss from the amplifier and stomp boxes.

The question I want to ask here is "Is it better to gate during recording, or at mix down?"

Without thinking too hard about it, most people gate at mix down. The advantages here are that there is no danger of irreversibly clipping the drum envelope, and in any case there is enough to do in a tracking session. In the old days, when rack units were expensive and therefore not too plentiful, a good reason to gate drums during recording was so the same unit could be reused (say for guitar parts) at mix down. The same approach was used for reverb, FX, EQ and so on. That reason has mostly gone these days thanks to plug-ins and cheaper hardware.

So why is it worth considering gating during tracking? There are two advantages. Firstly, even a pro will take a minute or two to set a gate up, as care is required. It is a balancing act between getting the gate to block what is not wanted and not affecting the wanted sound. Often a compromise between the two will be needed, and careful setting of the attack and range controls will be required after basic gating is achieved. How can this be a benefit? Well, it slows the process down, and the Engineer must be in full control of the session (ie the musicians) to perform such a task: what is required is slow, even hits on each drum. This is a good way to establish control of the session (not in a dictatorial way), and to make musicians aware that recording is a very slow process compared with live performance. It is not unusual for bands unfamiliar with multitrack recording to have no real understanding of what is involved, and therefore to greatly underestimate how long things will take to set up. Setting the gates on toms and snare is done early on (just after the line checks), so it is a good time to establish good communication and procedures with the band. As a side benefit, it forces the Engineer to focus absolutely on each drum sound individually, so there should be no surprises come mixdown day.

Secondly, gating at tracking pays off at mix down. Once the drum sounds have been considered to the point of committing the gate to them, the mixdown becomes easier. When the tracks are brought up and you solo the parts, they are already 'in the ball park'. This allows us to make good sounds great, rather than fighting with sounds (some of which end up only 'usable in the mix'). I would sooner have a mixdown that was 12 hours of enjoyment than one that was a 12 hour chore.
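For anyone wondering what the threshold, range and attack controls actually do to the signal, here is a bare-bones gate in Python. It is only a sketch under my own assumptions (a simple peak detector, one-pole gain smoothing, no hold control or sidechain filter), not how any particular hardware unit or plug-in works, and `simple_gate` and its parameters are hypothetical names.

```python
import math


def db_to_lin(db: float) -> float:
    return 10.0 ** (db / 20.0)


def simple_gate(samples, sample_rate, threshold_db=-30.0, range_db=-40.0,
                attack_ms=0.5, release_ms=80.0, detector_ms=10.0):
    """Minimal noise-gate sketch (hypothetical; not any particular unit's algorithm).

    threshold_db: level (re full scale) above which the gate opens.
    range_db:     how far the gate turns the signal down when closed
                  (-40 dB here), rather than muting it completely.
    attack_ms / release_ms: how quickly the gain opens / closes.
    detector_ms:  decay time of the peak detector that follows the signal level.
    """
    threshold = db_to_lin(threshold_db)
    floor = db_to_lin(range_db)
    # One-pole smoothing coefficients: values nearer 1.0 mean slower movement.
    det_coef = math.exp(-1.0 / (sample_rate * detector_ms / 1000.0))
    att_coef = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    rel_coef = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))

    envelope = 0.0
    gain = floor  # start closed
    out = []
    for x in samples:
        # Peak detector: jumps up instantly, decays slowly.
        envelope = max(abs(x), envelope * det_coef)
        target = 1.0 if envelope >= threshold else floor
        # Opening uses the attack time, closing uses the release time.
        coef = att_coef if target > gain else rel_coef
        gain = coef * gain + (1.0 - coef) * target
        out.append(x * gain)
    return out


if __name__ == "__main__":
    sr = 48000
    hit = [0.5] * (sr // 10)    # 100 ms at about -6 dB: a crude 'drum hit'
    spill = [0.001] * sr        # 1 s of spill at -60 dB
    gated = simple_gate(hit + spill, sr)
    print(f"hit level after gate:   {gated[len(hit) - 1]:.4f}")
    print(f"spill level after gate: {gated[-1]:.6f}")
```

The range setting is why the closed gate turns the spill down by 40dB rather than muting it outright; a short attack lets the stick transient through, and a longer release avoids chopping off the decay of the drum.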

Recording the Beatles

- EMI manufactured almost all of the studio equipment. Mics were the exception (these were originally STC and RCA, later AKG and Neumann).

- EMI modified ALL other brands of equipment. As a minimum, items were converted to 200Ω impedance.

- Sgt. Pepper's was recorded on 4 tracks (bouncing between them). Two 3M 8-track machines were at Abbey Road but had not yet been tested by the technical department. In any case they could not be used for overdubs or punch-ins, as the mixer was only designed with 4-track recording in mind.

- Ringo's drums (up to 1967) were recorded using an AKG D20 on kick and one D19 as an overhead mic.

- Varispeed playback was used extensively. This was manually controlled using a test oscillator.

- There were three echo chambers at Abbey Road that were used on most recordings. There were also plate reverbs but the natural reverb of the echo chambers was preferred.

- Lennon and Harrison secretly went to Phil Spector to produce ‘Let It Be’. George Martin was not impressed with this deal or the result.

- Several house Engineers were employed on Beatles recordings. Most of the first ones were done by Norman Smith, later ones by Geoff Emerick.

- The Balance Engineer operated the mixer and had limited technical knowledge.

- The Technical Engineers (Amp Room) plugged in ALL equipment needed for a session (even one patchcord!), and set up the studio mics according to the plan given to them by the Balance Engineer.

- Signal Processors were ordered before a session and wheeled in on a trolley. The Amp Room did this.

- There were also design engineers (the Lab) who created new equipment and acoustic solutions.

- Each session had a Tape Op. Initially this position was simply to operate the recorder and log the takes. As multitrack came along it became more involved, and this position eventually became Assistant Engineer.

- Session setups were done as follows:

  - Mic and signal processing setup (and line tests) was the job of the Technical Engineers.

  - The Tape Op operated the tape machine.

  - The Balance Engineer recorded the session.

  - A Technical Engineer was in the control room to provide whatever equipment was requested, and do all the patching.

  - George Martin did the production, including musical arranging and session work on keyboards.

- The reason the Beatles got signed to Parlophone (EMI) was that Brian Epstein was so persistent. George Martin said he eventually gave in, but would not have signed them if he had known every other recording company had turned them down.

- ADT was invented for the Beatles by Ken Townsend. It used the Studer J37 (1” 4 track) recorder, which could play back from the sync head and the playback head simultaneously, together with an EMI BTR2. A varispeed could put this second version of the signal slightly after or before the main one (about 40ms was typical).

- Abbey Road was the world's first purpose-built studio, started in 1931 when The Gramophone Company merged with Columbia to form EMI (Electric & Musical Industries).

- Studio 2, where the Beatles were recorded, was the middle-sized of the three studios. In fact it was large (60’ x 37’ x 28’).

- Operational staff progressed strictly as follows: Tape Library -> Tape Op -> Disc Cutting -> Balance Engineer

- Abbey Road had virtually every keyboard instrument and most of these were used at some time.

- In 1967 the Beatles employed “Magic Alex” to oversee the building and equipment for their own studio – Apple. He had 8 speakers in the control room (one per track), and there was no cabling between the studio and control room!

- Mixes from some of the other studios (eg Trident) sounded dull when played back on the flat EQ’d systems at EMI.

- The Beatles' first album (Please Please Me, 1963) was recorded in one day (9 hrs). This was nothing unusual for the time. In contrast, Sgt. Pepper's (1967) took 700 hours to complete.

- The maximum time for a recording session at Abbey Road was 3 hours. On the later albums, The Beatles broke the rules by starting their recording ‘day’ at 7pm, and sometimes going all night.

- The early recordings were done in “twin track” (rhythm=L, vox=R) and then combined to mono. The US record companies released these as stereo versions!

- All tape splicing was done with scissors, not razor blades.

- Early recordings used speakers for studio monitoring. Headphones were not introduced until 1966.

- The Beatles' 4-track recordings started in 1964. Track allocation was almost always:

1. Rhythm (drums, bass, guitar)

2. Guitar (lead & sometimes rhythm)

3. Vocals

4. Vocals and/or other instruments

- In 1966 the Beatles stopped touring to concentrate entirely on studio recording. This was a first.

- Numerous takes were done on the later songs (up to 70!). The best parts of different takes were sometimes spliced together.

source: Recording the Beatles - Kevin Ryan and Brian Kehew.
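The ADT idea is easy to try in software: mix the signal with a copy of itself delayed by a few tens of milliseconds, with the delay drifting slightly to imitate the varispeed being ridden by hand. This Python sketch assumes a 40ms base delay (the typical figure quoted above); the ±2ms, 0.5Hz drift values and the `adt_double` name are my own illustrative choices, not EMI's settings. It only ever delays the copy; to put the copy ahead of the main signal you would delay the main signal instead.

```python
import math


def adt_double(samples, sample_rate, delay_ms=40.0, drift_ms=2.0,
               drift_hz=0.5, mix=0.5):
    """ADT-style doubling sketch (hypothetical function, not EMI's circuit).

    Adds a copy of the signal delayed by delay_ms. The delay drifts by
    +/- drift_ms at drift_hz, loosely imitating an engineer riding the
    varispeed. Fractional delays are read with linear interpolation.
    mix sets the balance: 0.0 = dry only, 1.0 = delayed copy only.
    """
    if delay_ms - drift_ms <= 0.0:
        raise ValueError("delay must stay positive (this sketch only delays the copy)")
    out = []
    for n, x in enumerate(samples):
        d_ms = delay_ms + drift_ms * math.sin(2.0 * math.pi * drift_hz * n / sample_rate)
        pos = n - d_ms * sample_rate / 1000.0   # fractional read position
        i = math.floor(pos)
        frac = pos - i
        if i < 0:
            delayed = 0.0                        # the copy has not started yet
        else:
            a = samples[i]
            b = samples[i + 1] if i + 1 < len(samples) else 0.0
            delayed = a + frac * (b - a)         # linear interpolation
        out.append((1.0 - mix) * x + mix * delayed)
    return out
```

Setting `drift_ms` to 0 gives a plain fixed delay, which tends to sound more like a slapback than a double; the slow drift is what keeps the two copies from locking together.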

Zen and the Art of Producing

This book by Eric Sarafin contains lots of good advice on producing a band recording. Of particular interest is his recording philosophy:

"Making a record requires discipline - today more than at any other time in the history of recording. In fact, the progression of recording technology has been one that consistently shifts commitment to later in the process, to the point that producerless bands find themselves mixing in mastering sessions with stems. The ease with which you can change your mind, while seemingly a positive technological advance, is nothing short of a hindrance where effective record-making is concerned."

Clearly, he believes in the importance of the tracking session: getting the sounds right at the source, and not being afraid to commit. I second that.

Multi-track mix downloads

Mike Senior (who writes the Mix Rescue column in SOS magazine) has written a book "Mixing Secrets for the Small Studio", and has a website with lots of multitrack recordings available to download for mixing practice purposes.

Album Credits

This website is a database of who did what on recordings (singers, musicians, producers, engineers, songwriters, and so on).

Mixerman

Yes, he is a real recording engineer (Eric Sarafin). Hear him talk here. Some good advice it is too. I liked this bit: "I have a long hatred with Pro Tools over the years…" (he uses Logic). The term "2 buss" comes up a bit (translated, this is the stereo or mix buss). You can check out his MySpace page here. And referring to his book 'The Adventures of Mixerman', in his own words: "everything in the book is from my experiences".

The book is worth reading (but be prepared for some very coarse language) and here is how Mixerman sums it all up after 50 days of harrowing recording adventure:

"Herein lies the universal truth of this diary. No matter what you choose to do in life, regardless of your dedication to excellence or your commitment to your own sanity, you will always be at the mercy of the idiots who surround you. No matter how much you love your work, there will always be others trying to make it a chore, for they are of the ilk that believe that work should actually be work. And if I may personally serve as a lesson for anything whatsoever, let it be this - choose those who surround you carefully, for it has been well established that you will always become a product of your own environment."

Aye, that be true.

Audio connector characteristics

audio_connector_comparison_chart.pdf (72 kb)

Proximity Effect

To understand proximity effect we need to first know how a directional mic works:

A directional mic works on the pressure gradient of the soundwave. Sound reaching the back of the diaphragm has a longer path than sound reaching the front, and this delay causes a phase difference, with a corresponding pressure difference.

As the high frequencies have a shorter wavelength, the fixed front-to-back path is a larger fraction of a cycle, so the pressure difference will be greater (for the same SPL). This means the raw output of the mic rises at 6dB/oct.

To compensate for this, the mic is designed to have a -6dB/oct response (mainly through the mechanical tuning and damping of the diaphragm), so the final ƒ response from the mic is flat.

Up close (but not dangerous):

At very close distances to the mic the phase difference becomes overpowered by the amplitude difference. This is most noticeable at low frequencies, where the phase-related pressure difference is relatively small.

For example, the sound source may be 3mm from the front and 21mm from the rear. Sound pressure falls in proportion to distance, so the pressure at the rear of the diaphragm will be only 1/7 (about 14%) of that at the front, or about 17dB down. (The inverse square law applies to intensity, which drops to 1/49, or 2%: the same 17dB.) The response has changed from pressure gradient to pressure. In other words, the mic is now behaving like an omni (which is sealed at the rear).

So the ƒ response no longer rises at 6dB/oct, but the built-in -6dB/oct compensation is still there, so the overall output gives a bass boost.
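Both the 3mm/21mm arithmetic and the size of the bass boost can be checked numerically. This Python sketch assumes an ideal point source and an ideal pure pressure-gradient (figure-8) capsule, using the standard spherical-wave result that the near-field gradient response exceeds the far-field one by a factor of sqrt(1 + 1/(kr)²), where k = 2πf/c. A cardioid, being part pressure and part gradient, shows a smaller boost, and real mics will differ; the 343m/s speed of sound and the function names are my own assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at about 20 °C


def rear_to_front_ratios(front_dist, rear_dist):
    """Return (pressure_ratio, intensity_ratio) of rear vs front for a point source.

    Sound pressure falls as 1/r; intensity falls as 1/r^2 (inverse square law).
    Distances just need to be in the same units.
    """
    pressure_ratio = front_dist / rear_dist
    return pressure_ratio, pressure_ratio ** 2


def gradient_bass_boost_db(freq_hz, distance_m, c=SPEED_OF_SOUND):
    """On-axis proximity boost of an ideal pure pressure-gradient (figure-8) mic.

    For a spherical wave from a point source, the gradient response relative to
    the far-field (plane-wave) response is sqrt(1 + 1/(k*r)^2), with k = 2*pi*f/c.
    """
    kr = 2.0 * math.pi * freq_hz / c * distance_m
    return 10.0 * math.log10(1.0 + 1.0 / kr ** 2)


if __name__ == "__main__":
    p, i = rear_to_front_ratios(3, 21)  # the 3mm / 21mm example
    print(f"pressure ratio {p:.3f} ({20 * math.log10(p):.1f} dB), intensity ratio {i:.3f}")
    for r in (0.05, 0.15, 1.0):
        print(f"100 Hz at {r} m: +{gradient_bass_boost_db(100, r):.1f} dB")
```

At 100Hz this gives roughly 21dB of boost at 5cm, falling to around 1dB at 1m, while at high frequencies the boost is negligible at any practical distance.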

Microphones - Single diaphragm vs Dual diaphragm

Basics:

There are two basic operating principles that microphones use:

1. Pressure: closed back = omni polar response

2. Pressure gradient: open back (using slots) = directional polar response

A directional response can be achieved in two ways: allowing sound to enter the rear of a single diaphragm (thereby allowing phase cancellations due to the different front and rear path-lengths), or by using two diaphragms (and matrixing the electrical outputs).
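Matrixing two diaphragms is simple arithmetic if we assume a common arrangement: two ideal, back-to-back cardioid capsules whose outputs are summed with different gains. This Python sketch is frequency-independent, so it says nothing about the practical SD vs DD differences; it only shows how the polar patterns fall out of the sum. The function names and the chosen gains are my own.

```python
import math


def cardioid(theta_deg, facing_front=True):
    """Ideal first-order cardioid capsule sensitivity at angle theta (0 = front)."""
    c = math.cos(math.radians(theta_deg))
    return 0.5 * (1.0 + c) if facing_front else 0.5 * (1.0 - c)


def matrixed(theta_deg, front_gain, rear_gain):
    """Sum two back-to-back cardioid capsules with the given gains (the 'matrix')."""
    return (front_gain * cardioid(theta_deg, True)
            + rear_gain * cardioid(theta_deg, False))


# (front gain, rear gain) -> resulting ideal pattern
PATTERNS = {
    "omni": (1.0, 1.0),            # F + R     = 1
    "cardioid": (1.0, 0.0),        # F only    = 0.5 + 0.5 cos
    "hypercardioid": (1.0, -0.5),  # F - 0.5 R = 0.25 + 0.75 cos
    "figure-8": (1.0, -1.0),       # F - R     = cos
}

if __name__ == "__main__":
    for name, (front, rear) in PATTERNS.items():
        row = "  ".join(f"{matrixed(a, front, rear):+.2f}" for a in (0, 90, 180))
        print(f"{name:14s} 0/90/180 deg: {row}")
```

A negative gain on the rear capsule is what produces the rear lobe (with reversed polarity) of the hypercardioid and figure-8 patterns.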

On axis response:

- On a unidirectional mic, DD reduces proximity effect, therefore it is a good choice for close miking

Off axis response:

- DD directivity will change with frequency, whereas the SD will maintain constant directivity (therefore making the SD a good choice for distant miking)

In more detail:

A. The mid-field response (around 1m)

- SD: this will show considerable proximity at 180º (as the low ƒ response tends toward omni)

- DD: less dip in the 180º response (centred around 750Hz), ie the cardioid pattern is maintained at low ƒ

B. The far-field response (>3m)

- SD: remains a consistent cardioid down to the lowest ƒ

- DD: tends toward an omni pattern, therefore it picks up more bass off axis than the SD

- Both SD & DD omni types will become quite directional at high ƒ

C. The near-field response (0.05-0.15m)

- SD: massive bass boost due to proximity effect

- DD: polar response goes to almost super-cardioid (less bass boost)

- SD: polar response becomes an almost perfect figure-8 at low ƒ

- DD omnis exhibit a very slight proximity effect

A parallel world

Here is a link to an article by Richard Hulse (RNZ) on parallel compression. Note that this is an advanced technique, so make sure you are properly familiar with conventional (non-parallel) compression first! <http://homepages.paradise.net.nz/rhulse/Side%20Chain/sidechain.htm>

For a practical guide, using Logic Audio, try this Attack Magazine tutorial.
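The principle behind parallel compression can be shown with steady-state levels alone. This Python sketch assumes a hard-knee compressor (threshold -30dB, ratio 8:1, my own example settings) whose output is summed at equal level with the untouched signal, and that the compressed path adds no latency, so the two copies add in phase. `compressed_level_db` and `parallel_level_db` are hypothetical helpers, not taken from any plug-in.

```python
import math


def compressed_level_db(in_db, threshold_db=-30.0, ratio=8.0):
    """Static hard-knee compressor curve: output level for a steady input level."""
    if in_db <= threshold_db:
        return in_db
    return threshold_db + (in_db - threshold_db) / ratio


def parallel_level_db(in_db, wet_gain_db=0.0, threshold_db=-30.0, ratio=8.0):
    """Output level when the dry signal is summed with a compressed copy.

    Assumes the compressed path adds no latency or phase shift, so the two
    copies add in phase (their amplitudes simply sum).
    """
    dry = 10.0 ** (in_db / 20.0)
    wet = 10.0 ** ((compressed_level_db(in_db, threshold_db, ratio) + wet_gain_db) / 20.0)
    return 20.0 * math.log10(dry + wet)


if __name__ == "__main__":
    for level in (-50, -40, -30, -20, -10, 0):
        print(f"in {level:4d} dB -> out {parallel_level_db(level):6.1f} dB")
```

With these settings a -40dB passage comes out about 6dB louder (both copies are equal below the threshold), whereas a 0dB peak rises by less than 0.5dB: the quiet detail is brought up while the dry signal keeps the peaks intact.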

Signal Processing or Effecting?

Sometimes I get asked what the difference is between signal processors and FX (effects units). This used to be fairly clear-cut: signal processing is maintaining or improving the signal quality from a technical perspective, whereas FX are a non-essential but interesting addition to the signal. Thus EQ, compression, and gating are examples of signal processing. On the other hand delay, reverb, and chorusing would be FX. In the old days, to further clarify the function of rack units, the SPs might be put in one rack and the FX in another. Then multi-effect units came along, and this blurred the line between the two sorts. Now we are using plug-ins, selected from menus with little regard for whether they are one or the other. Does it matter? Let's face it, for music production every sound choice is really an artistic one, so strict definitions don't always apply. For example, EQ can be used correctively (SP?) or creatively (FX?), so ultimately any adjustment is about getting a great sound, which is subjective. And isn't putting chorus on a vocal done to improve the signal? It is just from a listener's perspective, rather than a technical one. That said, it can still be helpful to split these out sometimes. Say I have a tom drum sound I am not happy with. First I might filter out unwanted HF and LF noise, then gate the drum to eliminate leakage. Next I EQ to eliminate a ring, then put a second gate in. This time the gate is not to reduce noise, but to alter the envelope of the drum. Finally I add some EQ to polish off the new punchy drum sound. So my signal chain here is EQ (SP), Gate (SP), EQ (SP), Gate (FX), EQ (FX). The order is important ([Gate + EQ] ≠ [EQ + Gate]), so separating these virtual units mentally can help get the correct order according to the function of each one.
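Why does the order matter? A gate is non-linear: it makes its decision on the level it sees, so anything placed before it changes that decision. This toy Python example uses a first-order high-pass filter as a stand-in for an EQ low cut, and an instant hard gate (no attack, release or hysteresis, so it is far cruder than a real gate); the signal, cutoff and threshold are my own choices. With the loud 40Hz rumble still present, the gate is held open much of the time and the 3kHz part gets through; with the rumble filtered off first, the 3kHz part alone never reaches the threshold and is muted.

```python
import math


def high_pass(samples, sample_rate, cutoff_hz):
    """First-order (6dB/oct) high-pass filter, standing in for an EQ low cut."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out, prev_x, prev_y = [], 0.0, 0.0
    for x in samples:
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


def hard_gate(samples, threshold):
    """Instant hard gate: pass samples whose magnitude reaches the threshold, mute the rest."""
    return [x if abs(x) >= threshold else 0.0 for x in samples]


sr = 48000
indices = range(sr // 10)  # 100 ms
# Loud 40 Hz rumble (spill) plus a quieter 3 kHz component we actually want.
signal = [0.3 * math.sin(2 * math.pi * 40 * i / sr)
          + 0.05 * math.sin(2 * math.pi * 3000 * i / sr) for i in indices]

gate_then_eq = high_pass(hard_gate(signal, 0.1), sr, 400.0)
eq_then_gate = hard_gate(high_pass(signal, sr, 400.0), 0.1)

max_difference = max(abs(a - b) for a, b in zip(gate_then_eq, eq_then_gate))
print(f"largest sample difference between the two orders: {max_difference:.3f}")
```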

Shunting the signal path

Recently someone asked me what I thought about lowering the input Z at the mic pre to get a better sound from an SM57, and I had to admit that I had never tried it, although I thought there might be some merit in doing so. Now I have and can report the results.

The Shure SM57 is a perennial favourite for recording snare drum and electric guitar. It is also quite an old design now, and was made to work with an input Z of 600Ω (this standard came from broadcasting / telephony). Modern mic preamps have a higher input Z. My test was done on an Audient ASP8024 desk. The manual states that the input Z is >1.5kΩ but gives no more information. Given that this is a professional desk and must not compromise quality condenser mics, my guess is that the impedance will be well above 1.5kΩ, probably somewhere around 2-2.5kΩ. In any case, to maximise the difference I chose to shunt the signal with 700Ω. This pulled the input Z down to below 500Ω if the desk Z is 1500Ω, or to around 550Ω if we assume 2500Ω. First I tracked a snare drum, then electric guitar (clean and distorted). I adjusted the levels to be the same (the shunted mic level drops a bit). There was an obvious difference in sonic quality: noticeable in both the snare drum and guitar recordings was a lack of low frequency colouration. This lack of muddiness let the transients come through more. I didn't put a 'scope on to see if the transients were actually improved or if it was a subjective thing due to the lack of masking. In any case, I would say there was an improvement. Could I have got the same results by using EQ on the desk? Now there's an experiment for another day.
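The impedance figures above are just resistors in parallel, and the level drop comes from the voltage divider formed by the mic's source impedance and the load. This Python sketch treats everything as pure resistance, and the 310Ω source impedance for the SM57 is my assumption, not a measured value, so treat the loss figures as approximate. Because a real moving-coil mic's impedance varies with frequency, this model shows only the level drop, not the tonal change I heard.

```python
import math


def parallel_z(z1, z2):
    """Combined impedance of two resistances in parallel (product over sum)."""
    return z1 * z2 / (z1 + z2)


def loading_loss_db(source_z, load_z):
    """Level lost in the voltage divider formed by source and load impedances."""
    return 20.0 * math.log10(load_z / (source_z + load_z))


SHUNT = 700.0   # ohms, the resistor used in the test
MIC_Z = 310.0   # ohms; ASSUMED source impedance for the SM57 (approximate)

if __name__ == "__main__":
    for desk_z in (1500.0, 2500.0):
        shunted = parallel_z(SHUNT, desk_z)
        print(f"desk {desk_z:.0f} ohm -> shunted {shunted:.0f} ohm; "
              f"loading loss {loading_loss_db(MIC_Z, desk_z):.1f} dB unshunted, "
              f"{loading_loss_db(MIC_Z, shunted):.1f} dB shunted")
```

Under these assumptions the shunt costs roughly 3dB of extra level at either desk impedance, which fits with the mic level dropping a bit.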

Alternative paths to the Mix

Time to do a band mixdown, but where to start? Well, there are fundamentally two ways to mix (this is after all the track cleaning etc has been done). One is the drums-up mix, where you start with the drums (or whatever is making the beat) and then add in the other rhythm parts one by one. So in pure terms you solo the kick drum and get a good sound, then add the snare, then the hi-hat, then the overheads and finally the toms. At this point check the overall balance of the drums. This assumes that the usual things get done to each part to make it sit in this 'sub-mix', ie EQ, gating and possibly compression. Next you add in the bass, paying particular attention to getting it sounding right with the kick drum. This will normally require some EQ and compression too. Finally add the guitar parts (rhythm and riffs, not lead parts). Often these don't require much signal processing, but they will need to be panned to make way for the vocal. At some point you need to consider FX too (reverb, delay etc), but that can wait; now it is time to set the vocal into this rhythm 'backing track'. On a good day the lead vocal will sit OK with what is already there, provided some EQ and compression is used. Quite often, though, it will sink into the mix and need some fairly blunt treatment to keep it from getting lost or sounding muddy. It is easy at this point to try to push the vocal to the front of the mix with harsh compression settings and/or upper-mid boosts (which will exaggerate sibilance).

So… why not try the opposite approach: the vocal-up mix. Start with just the lead vocal. Consider how it should sound according to the style and tone of the voice and, of course, the song. Vocals are interesting because no two are the same (I say this of some instruments too, but for voices it is absolutely true). A particular voice has what is called a formant (maybe more than one), which is a frequency region that is accentuated. Sometimes this will work in a mix, and other times it will work against you, in which case it is a matter of trying to create an artificial formant by boosting some frequencies with EQ. One benefit of a vocal-up mix is that you can stay a lot closer to the natural frequency contours of the singer. Let's now take things a stage further and add reverb to the voice. This is where a good vocal in a drums-up mix can get lost. Yes, pre-delay is important, but let's face it, getting the big vocal sound a pop song requires is going to need a lot of reverb, and that smears the signal right across the stereo field. The result is often a vocal that gets a bit lost and/or conflicts with the other parts of the mix for frequencies or space. The big advantage of the vocal-up mix is that you can focus exclusively on these aspects. Everything else is added once the vocal is firmly established. The other parts are therefore subservient to the lead vocal, which, when you think about it, is the right way round for a song. If, say, a guitar part is conflicting with the voice, then work on the guitar to make it fit (using EQ and panning). FX can then be selected with the vocal in mind, eg don't put a big reverb on the guitar (as the vocal already has that); maybe chorusing will work better. Ultimately we want a good meld of the different parts wrapped around the vocal, and of course enough clarity that we can hear individual instruments if we listen for them.

While we are thinking about the order of things… tracking has established its own 'rules' too. Usually it is best to record drums, bass, rhythm guitar and guide vocal together first. One of the key benefits of this method is that the players work together in real time to build the groove of the song. In particular, an important interchange occurs between the drummer and the bass player. They can push and pull each other to get the tempo just right for each part of the song. Furthermore, they can play around the beat to create the 'feel' or groove of the track. For example, the kick and hi-hat might be played right on the beat, and the snare and bass slightly ahead of it. Then in the chorus the snare might be quite late, and the bass slightly behind the beat too. If either of these instruments (ie bass or drums) is overdubbed, the second part can only follow what is already recorded, without the other player pushing and pulling to create the groove. It is a bit like shaking hands with yourself: not quite the same. Having said that, when Phil Spector was creating his Wall of Sound, the drums were the last instrument to be recorded. He used that technique to make some of the greatest records of all time, so I guess it comes down to the skill level of the performers and the producer. Spector had arguably the greatest lineup of session musicians of all time. In almost all cases the lead vocal will be overdubbed and go down last, so the singer can hear the most complete musical arrangement possible. This allows him/her to get the energy level right for the song.
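To put rough numbers on "slightly ahead" or "quite late": at a given tempo one beat lasts 60,000/BPM milliseconds, so a timing offset can be expressed as a fraction of a beat or as a number of samples. The Python sketch below assumes a 44.1kHz sample rate and uses a hypothetical 10 ms push at 120 BPM purely for illustration; the text above gives no specific figures, and the function names are illustrative only.

```python
def beat_period_ms(bpm: float) -> float:
    """Duration of one beat (quarter note) in milliseconds."""
    return 60_000.0 / bpm


def offset_as_fraction_of_beat(offset_ms: float, bpm: float) -> float:
    """Timing offset relative to one beat; negative means ahead of (pushing) the beat."""
    return offset_ms / beat_period_ms(bpm)


def offset_in_samples(offset_ms: float, sample_rate_hz: int = 44_100) -> int:
    """Timing offset rounded to whole samples at the given sample rate."""
    return round(offset_ms * sample_rate_hz / 1000.0)


bpm = 120.0             # hypothetical tempo
snare_push_ms = -10.0   # hypothetical: snare played 10 ms ahead of the beat

print(beat_period_ms(bpm))
print(offset_as_fraction_of_beat(snare_push_ms, bpm))
print(offset_in_samples(snare_push_ms))
```

At 120 BPM a beat lasts 500 ms, so a 10 ms push is 2% of a beat, or 441 samples at 44.1kHz.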

Focusrite Liquid Channel review

I reviewed this great piece of gear soon after it came out (NZ Musician magazine, June 2005). Now I see it is finally getting the recognition it deserves. It has won the SOS 'best mic pre' award two years running (2011 and 2012). You can read about it here.

liquid_channel_review.pdf (242 kb)

Eddie Offord: Engineer-Producer

Eddie Offord engineered and/or produced two of the best bands ever, ELP and YES. Here is my tribute to the great man.

eddieofford.pps (PowerPoint show, 3290 kb)